mcsm-benchs: Creating benchmarks of MCS Methods

We introduce a public, open-source, Python-based toolbox for benchmarking multi-component signal analysis methods, implemented either in Python or Matlab.

The goal of this toolbox is providing the signal-processing community with a common framework that allows researcher-independent comparisons between methods and favors reproducible research.

With the purpose of making this toolbox more useful, the methods to compare, the tests, the signal generation code and the performance evaluation functions were conceived as different modules, so that one can modify them independently. The only restriction this pose is that the methods should satisfy some requirements regarding the shape of their input and output parameters.

On the one hand, the tests and the performance evaluation functions, are encapsulated in the class Benchmark. On the other hand, the signals used in this benchmark are generated by the methods in the class SignalBank.

In order to compare different methods with possibly different parameters, we need to set up a few things before running the benchmark. A Benchmark object receives some input parameters to configure the test:

task: This could be'denoising'or'detection','component_denoising'or'inst_frequency'. The first one compute the quality reconstruction factor (QRF) using the output of the method, whereas the second simply consist in detecting whether a signal is present or not. Finally,'component_denoising'compares the QRF component wise, and ‘inst_frequency'computes the mean squared error between estimations of the instantaneous frequencyN: The length of the simulation, i.e. how many samples should the signals have.methods: A dictionary of methods. Each entry of this dictionary corresponds to the function that implements each of the desired methods.parameters: A dictionary of parameters. Each entry of this dictionary corresponds to iterator with positional and/or keyword arguments. In order to know which parameters should be passed to each method, the keys of this dictionary should be the same as those corresponding to the individual methods in the corresponding dictionary. An example of this is showed in below.SNRin: A list or tuple of values of SNR to test.repetitions: The number of times the experiment should be repeated with different realizations of noise.signal_ids: A list of signal ids (corresponding to the names of the signal in the class ‘SignalBank’) can be passed here in order to test the methods on those signals. Optionally, the user can pass a dictionary where each key is used as an identifier, and the corresponding value can be a numpy array with a personalized signal.

A dummy test

First let us define a dummy method for testing. Methods should receive a numpy array with shape (N,) where N is the number of time samples of the signal. Additionally, they can receive any number of positional or keyword arguments to allow testing different combinations of input parameters. The shape of the output depends on the task (signal denoising or detection). So the recommended signature of a method should be the following:

output = a_method(noisy_signal, *args, **kwargs).

If one set task='denoising', output should be a (N,) numpy array, i.e. the same shape as the input parameter noisy_signal, whereas if task='detection', the output should be boolean (0 or False for no signal, and 1 or True otherwise).

After this, we need to create a dictionary of methods to pass the Benchmark object at the moment of instantiation.

[20]:

import numpy as np

from numpy import pi as pi

import pandas as pd

from matplotlib import pyplot as plt

from mcsm_benchs.Benchmark import Benchmark

from mcsm_benchs.ResultsInterpreter import ResultsInterpreter

from mcsm_benchs.SignalBank import SignalBank

from utils import spectrogram_thresholding, get_stft

Creating a dictionary of methods

Let’s create a dictionary of methods to benchmark. As as example, we will compare two strategies for spectrogram thresholding. The first one is hard thresholding, in which the thresholding function is defined as: The second one is soft thresholding, here defined as:

These two approaches are implemented in the python function thresholding(signal, lam, fun='hard') function, which receives a signal to clean, a positional argument lam and a keyword argument fun that can be either hard or soft.

Our dictionary of methods will consist then in two methods: hard thresholding and soft thresholding. For both approaches, let’s use a value of lam=1.0 for now.

[21]:

def method_1(noisy_signal, *args, **kwargs):

# If additional input parameters are needed, they can be passed in a tuple using

# *args or **kwargs and then parsed.

xr = spectrogram_thresholding(noisy_signal,1.0,fun='hard')

return xr

def method_2(noisy_signal, *args, **kwargs):

# If additional input parameters are needed, they can be passed in a tuple using

# *args or **kwargs and then parsed.

xr = spectrogram_thresholding(noisy_signal,2.0,fun='soft')

return xr

# Create a dictionary of the methods to test.

my_methods = {

'Method 1': method_1,

'Method 2': method_2,

}

The variable params in the example above allows us to pass some parameters to our method. This would be useful for testing a single method with several combination of input parameters. In order to do this, we should give the Benchmark object a dictionary of parameters. An example of this functionality is showed in the next section. For now, lets set the input parameter parameters = None.

Now we are ready to instantiate a Benchmark object and run a test using the proposed methods and parameters. The benchmark constructor receives a name of a task (which defines the performance function of the test), a dictionary of the methods to test, the desired length of the signals used in the simulation, a dictionary of different parameters that should be passed to the methods, an array with different values of SNR to test, and the number of repetitions that should be used for each test.

Once the object is created, use the class method run_test() to start the experiments.

Remark 1: You can use the ``verbosity`` parameter to show less or more messages during the progress of the experiments. There are 6 levels of verbosity, from ``verbosity=0`` (indicate just the start and the end of the experiments) to ``verbostiy = 5`` (show each method and parameter progress)

Remark 2: Parallelize the experiments is also possible by passing the parameter ``parallelize = True``.

[22]:

benchmark = Benchmark(task = 'denoising',

methods = my_methods,

N = 256,

SNRin = [10,20],

repetitions = 1000,

signal_ids=['LinearChirp', 'CosChirp',],

verbosity=0,

parallelize=False)

benchmark.run_test() # Run the test. my_results is a dictionary with the results for each of the variables of the simulation.

benchmark.save_to_file('saved_benchmark') # Save the benchmark to a file.

Method run_test() will be deprecated in newer versions. Use run() instead.

Running benchmark...

0%| | 0/2 [00:00<?, ?it/s]100%|██████████| 2/2 [00:00<00:00, 2.23it/s]

100%|██████████| 2/2 [00:00<00:00, 2.32it/s]

[22]:

True

[23]:

same_benchmark = Benchmark.load_benchmark('saved_benchmark') # Load the benchmark from a file.

Now we have the results of the test in a nested dictionary called my_results. In order to get the results in a human-readable way using a DataFrame, and also for further analysis and reproducibility, we can use the class method get_results_as_df().

[24]:

results_df = benchmark.get_results_as_df() # This formats the results on a DataFrame

results_df

[24]:

| Method | Parameter | Signal_id | Repetition | 10 | 20 | |

|---|---|---|---|---|---|---|

| 2000 | Method 1 | ((), {}) | CosChirp | 0 | 11.886836 | 22.377151 |

| 2001 | Method 1 | ((), {}) | CosChirp | 1 | 12.722813 | 22.764552 |

| 2002 | Method 1 | ((), {}) | CosChirp | 2 | 12.397020 | 22.369574 |

| 2003 | Method 1 | ((), {}) | CosChirp | 3 | 11.548922 | 22.221890 |

| 2004 | Method 1 | ((), {}) | CosChirp | 4 | 12.083445 | 22.151975 |

| ... | ... | ... | ... | ... | ... | ... |

| 1995 | Method 2 | ((), {}) | LinearChirp | 995 | 6.838226 | 17.240144 |

| 1996 | Method 2 | ((), {}) | LinearChirp | 996 | 6.713695 | 18.924030 |

| 1997 | Method 2 | ((), {}) | LinearChirp | 997 | 6.418767 | 18.474438 |

| 1998 | Method 2 | ((), {}) | LinearChirp | 998 | 6.711867 | 18.170891 |

| 1999 | Method 2 | ((), {}) | LinearChirp | 999 | 7.773640 | 19.012253 |

4000 rows × 6 columns

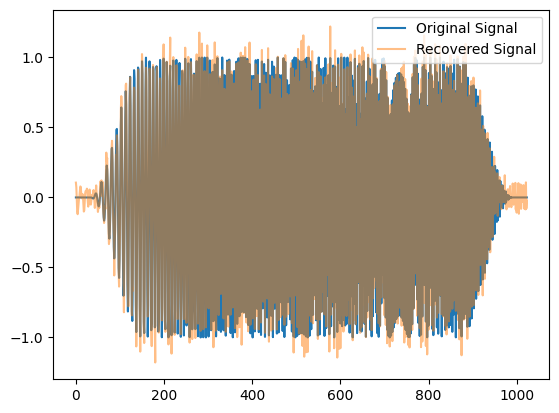

[25]:

sb = SignalBank(N=1024)

s = sb.signal_linear_chirp()

noise = np.random.randn(1024)

x = Benchmark.sigmerge(s, noise, 15)

xr = spectrogram_thresholding(x,1.0,'hard')

fig,ax = plt.subplots(1,1)

ax.plot(s,label='Original Signal')

ax.plot(xr,alpha=0.5,label='Recovered Signal')

ax.legend()

[25]:

<matplotlib.legend.Legend at 0x7cd6a0114fe0>

As we can see, the DataFrame show the results ordered by columns. The first column corresponds to the method identification, and the values are taken from the keys of the dictionary of methods. The second column enumerates the parameters used (more on this on the next section). The third column corresponds to the signal identification, using the signal identification values from the SignalBank class. The next column shows the number of repetition of the experiment. Finally, the remaining

columns show the results obtained for the SNR values used for each experiment. Since task = 'denoising', these values correspond to the QRF computed as QRF = 10*np.log10(E(s)/E(s-sr)), where E(x) is the energy of x, and s and sr are the noiseless signal and the reconstructed signal respectively.

Passing different parameters to the methods.

It is common that a method depends on certain input parameters (thresholds, multiplicative factors, etc). Therefore, it would be useful that the tests could also be repeated with different parameters, instead of creating multiple versions of one method. We can pass an array of parameters to a method provided it parses them internally. In order to indicate the benchmark which parameters combinations should be given to each method, a dictionary of parameters can be given.

Let us now create this dictionary. The parameters combinations should be given in a tuple of tuples, so that each internal tuple is passed as the additional parameter (the corresponding method, of course, should implement how to deal with the variable number of input parameters). For this to work, the keys of this dictionary should be the same as those of the methods dictionary.

We can now see more in detail how to pass different parameters to our methods. For instance, let’s consider a function that depends on two thresholds thr1 (a positional argument) and thr2 (a keyword argument):

Now let us create a method that wraps the previous function and then define the dictionary of methods for our benchmark. Notice that the method should distribute the parameters in the tuple params.

[26]:

def method_1(noisy_signal, *args, **kwargs):

# If additional input parameters are needed, they can be passed in a tuple using

# *args or **kwargs and then parsed.

xr = spectrogram_thresholding(noisy_signal,*args,**kwargs)

return xr

# aaa = np.random.rand()

# if aaa>0.5:

# return True

# else:

# return False

def method_2(noisy_signal, *args, **kwargs):

# If additional input parameters are needed, they can be passed in a tuple using

# *args or **kwargs and then parsed.

# print(np.std(noisy_signal))

xr = spectrogram_thresholding(noisy_signal,*args,**kwargs)

return xr

# aaa = np.random.rand()

# if aaa>0.2:

# return True

# else:

# return False

# Create a dictionary of the methods to test.

my_methods = {

'Method 1': method_1,

'Method 2': method_2,

}

Having done this, we can define the different combinations of parameters using the corresponding dictionary:

[27]:

# Create a dictionary of the different combinations of thresholds to test.

# Remember the keys of this dictionary should be same as the methods dictionary.

my_parameters = {

'Method 1': [((thr,),{'fun': 'hard',}) for thr in np.arange(1.0,4.0,1.0)],

'Method 2': [((thr,),{'fun': 'soft',}) for thr in np.arange(1.0,4.0,1.0)],

}

print(my_parameters['Method 1'])

[((np.float64(1.0),), {'fun': 'hard'}), ((np.float64(2.0),), {'fun': 'hard'}), ((np.float64(3.0),), {'fun': 'hard'})]

So now we have four combinations of input parameters for another_method(), that will be passed one by one to the method so that all the experiments will be carried out for each of the combinations. Let us set the benchmark and run a test using this new configuration of methods and parameters. After that, we can use the Benchmark class method get_results_as_df() to obtain a table with the results as before:

[28]:

benchmark = Benchmark(task = 'denoising',

methods = my_methods,

parameters=my_parameters,

N = 256,

SNRin = [10,20,30],

repetitions = 15,

signal_ids=['LinearChirp', 'CosChirp',],

verbosity=0,

parallelize=False,

write_log=True,

)

benchmark.run() # Run the benchmark.

benchmark.save_to_file('saved_benchmark') # Save the benchmark to a file.

Running benchmark...

100%|██████████| 3/3 [00:00<00:00, 37.00it/s]

100%|██████████| 3/3 [00:00<00:00, 48.16it/s]

[28]:

True

[29]:

benchmark = Benchmark.load_benchmark('saved_benchmark')

benchmark.log

[29]:

[]

The experiments have been repeated for every combination of parameters, listed in the second column of the table as Parameter.

[30]:

results_df = benchmark.get_results_as_df() # This formats the results on a DataFrame

results_df

[30]:

| Method | Parameter | Signal_id | Repetition | 10 | 20 | 30 | |

|---|---|---|---|---|---|---|---|

| 30 | Method 1 | ((np.float64(1.0),), {'fun': 'hard'}) | CosChirp | 0 | 11.886836 | 22.377151 | 31.089159 |

| 31 | Method 1 | ((np.float64(1.0),), {'fun': 'hard'}) | CosChirp | 1 | 12.722813 | 22.764552 | 31.669619 |

| 32 | Method 1 | ((np.float64(1.0),), {'fun': 'hard'}) | CosChirp | 2 | 12.397020 | 22.369574 | 30.790403 |

| 33 | Method 1 | ((np.float64(1.0),), {'fun': 'hard'}) | CosChirp | 3 | 11.548922 | 22.221890 | 30.926058 |

| 34 | Method 1 | ((np.float64(1.0),), {'fun': 'hard'}) | CosChirp | 4 | 12.083445 | 22.151975 | 30.564808 |

| ... | ... | ... | ... | ... | ... | ... | ... |

| 325 | Method 2 | ((np.float64(3.0),), {'fun': 'soft'}) | LinearChirp | 10 | 2.917067 | 14.576784 | 25.606940 |

| 326 | Method 2 | ((np.float64(3.0),), {'fun': 'soft'}) | LinearChirp | 11 | 3.818644 | 15.059165 | 25.779403 |

| 327 | Method 2 | ((np.float64(3.0),), {'fun': 'soft'}) | LinearChirp | 12 | 3.175252 | 14.396559 | 25.338387 |

| 328 | Method 2 | ((np.float64(3.0),), {'fun': 'soft'}) | LinearChirp | 13 | 2.950446 | 14.897105 | 23.843373 |

| 329 | Method 2 | ((np.float64(3.0),), {'fun': 'soft'}) | LinearChirp | 14 | 4.757054 | 15.854162 | 25.568327 |

180 rows × 7 columns

Generating plots with the Results Interpreter.

[31]:

# Summary interactive plots with Plotly and a report.

from plotly.offline import iplot

import plotly.io as pio

pio.renderers.default = "plotly_mimetype+notebook"

interpreter = ResultsInterpreter(benchmark)

interpreter.save_report(bars=True)

/home/juan/Nextcloud/benchmark_standalone/mcsm-benchs/mcsm_benchs/ResultsInterpreter.py:343: FutureWarning:

Setting an item of incompatible dtype is deprecated and will raise an error in a future version of pandas. Value '12.02' has dtype incompatible with float64, please explicitly cast to a compatible dtype first.

/home/juan/Nextcloud/benchmark_standalone/mcsm-benchs/mcsm_benchs/ResultsInterpreter.py:343: FutureWarning:

Setting an item of incompatible dtype is deprecated and will raise an error in a future version of pandas. Value '22.37' has dtype incompatible with float64, please explicitly cast to a compatible dtype first.

/home/juan/Nextcloud/benchmark_standalone/mcsm-benchs/mcsm_benchs/ResultsInterpreter.py:343: FutureWarning:

Setting an item of incompatible dtype is deprecated and will raise an error in a future version of pandas. Value '32.04' has dtype incompatible with float64, please explicitly cast to a compatible dtype first.

/home/juan/Nextcloud/benchmark_standalone/mcsm-benchs/mcsm_benchs/ResultsInterpreter.py:343: FutureWarning:

Setting an item of incompatible dtype is deprecated and will raise an error in a future version of pandas. Value '12.03' has dtype incompatible with float64, please explicitly cast to a compatible dtype first.

/home/juan/Nextcloud/benchmark_standalone/mcsm-benchs/mcsm_benchs/ResultsInterpreter.py:343: FutureWarning:

Setting an item of incompatible dtype is deprecated and will raise an error in a future version of pandas. Value '22.05' has dtype incompatible with float64, please explicitly cast to a compatible dtype first.

/home/juan/Nextcloud/benchmark_standalone/mcsm-benchs/mcsm_benchs/ResultsInterpreter.py:343: FutureWarning:

Setting an item of incompatible dtype is deprecated and will raise an error in a future version of pandas. Value '30.91' has dtype incompatible with float64, please explicitly cast to a compatible dtype first.

[31]:

True

[32]:

figs = interpreter.get_summary_plotlys(results_df,bars=True)

for fig in figs:

iplot(fig)

Checking elapsed time for each method

[33]:

df = interpreter.elapsed_time_summary()

df

[33]:

| Average time (s) | Std | |

|---|---|---|

| CosChirp-Method 1-((np.float64(1.0),), {'fun': 'hard'}) | 0.000194 | 0.000038 |

| CosChirp-Method 1-((np.float64(2.0),), {'fun': 'hard'}) | 0.000170 | 0.000015 |

| CosChirp-Method 1-((np.float64(3.0),), {'fun': 'hard'}) | 0.000173 | 0.000016 |

| CosChirp-Method 2-((np.float64(1.0),), {'fun': 'soft'}) | 0.000180 | 0.000009 |

| CosChirp-Method 2-((np.float64(2.0),), {'fun': 'soft'}) | 0.000213 | 0.000050 |

| CosChirp-Method 2-((np.float64(3.0),), {'fun': 'soft'}) | 0.000197 | 0.000021 |

| LinearChirp-Method 1-((np.float64(1.0),), {'fun': 'hard'}) | 0.000171 | 0.000017 |

| LinearChirp-Method 1-((np.float64(2.0),), {'fun': 'hard'}) | 0.000165 | 0.000013 |

| LinearChirp-Method 1-((np.float64(3.0),), {'fun': 'hard'}) | 0.000167 | 0.000015 |

| LinearChirp-Method 2-((np.float64(1.0),), {'fun': 'soft'}) | 0.000227 | 0.000054 |

| LinearChirp-Method 2-((np.float64(2.0),), {'fun': 'soft'}) | 0.000193 | 0.000022 |

| LinearChirp-Method 2-((np.float64(3.0),), {'fun': 'soft'}) | 0.000179 | 0.000021 |

[34]:

interpreter.rearrange_data_frame()

[34]:

| SNRin | Method | Parameter | Signal_id | Repetition | QRF | |

|---|---|---|---|---|---|---|

| 0 | 10 | Method 1 | ((np.float64(1.0),), {'fun': 'hard'}) | CosChirp | 0 | 11.886836 |

| 1 | 10 | Method 1 | ((np.float64(1.0),), {'fun': 'hard'}) | CosChirp | 1 | 12.722813 |

| 2 | 10 | Method 1 | ((np.float64(1.0),), {'fun': 'hard'}) | CosChirp | 2 | 12.397020 |

| 3 | 10 | Method 1 | ((np.float64(1.0),), {'fun': 'hard'}) | CosChirp | 3 | 11.548922 |

| 4 | 10 | Method 1 | ((np.float64(1.0),), {'fun': 'hard'}) | CosChirp | 4 | 12.083445 |

| ... | ... | ... | ... | ... | ... | ... |

| 535 | 30 | Method 2 | ((np.float64(3.0),), {'fun': 'soft'}) | LinearChirp | 10 | 25.606940 |

| 536 | 30 | Method 2 | ((np.float64(3.0),), {'fun': 'soft'}) | LinearChirp | 11 | 25.779403 |

| 537 | 30 | Method 2 | ((np.float64(3.0),), {'fun': 'soft'}) | LinearChirp | 12 | 25.338387 |

| 538 | 30 | Method 2 | ((np.float64(3.0),), {'fun': 'soft'}) | LinearChirp | 13 | 23.843373 |

| 539 | 30 | Method 2 | ((np.float64(3.0),), {'fun': 'soft'}) | LinearChirp | 14 | 25.568327 |

540 rows × 6 columns